A computer is a machine that manipulates data according to a set of instructions.

Although mechanical examples of computers have existed through much of recorded human history, the first electronic computers were developed in the mid-20th century (1940–1945). These were the size of a large room, consuming as much power as several hundred modern personal computers (PCs).[1] Modern computers based on integrated circuits are millions to billions of times more capable than the early machines, and occupy a fraction of the space.[2] Simple computers are small enough to fit into a wristwatch and can be powered by a watch battery. Personal computers in their various forms are icons of the Information Age and are what most people think of as "computers". The embedded computers found in many devices, from MP3 players to fighter aircraft and from toys to industrial robots, are, however, the most numerous.

The ability to store and execute lists of instructions called programs makes computers extremely versatile, distinguishing them from calculators. This versatility is reflected in the Church–Turing thesis: any computer with a certain minimum capability is, in principle, capable of performing the same tasks that any other computer can perform. Therefore, computers ranging from a mobile phone to a supercomputer are all able to perform the same computational tasks, given enough time and storage capacity.

History of computing

The first recorded use of the word "computer" dates from 1613, referring to a person who carried out calculations, or computations, and the word continued to be used in that sense until the middle of the 20th century. From the end of the 19th century onwards, though, the word began to take on its more familiar meaning, describing a machine that carries out computations.[3]

The history of the modern computer begins with two separate technologies—automated calculation and programmability—but no single device can be identified as the earliest computer, partly because of the inconsistent application of that term. Examples of early mechanical calculating devices include the abacus, the slide rule and arguably the astrolabe and the Antikythera mechanism (which dates from about 150–100 BC). Hero of Alexandria (c. 10–70 AD) built a mechanical theater which performed a play lasting 10 minutes and was operated by a complex system of ropes and drums that might be considered to be a means of deciding which parts of the mechanism performed which actions and when.[4] This is the essence of programmability.

The "castle clock", an astronomical clock invented by Al-Jazari in 1206, has been described as the earliest programmable analog computer.[5] It displayed the zodiac, the solar and lunar orbits, a crescent moon-shaped pointer travelling across a gateway causing automatic doors to open every hour,[6][7] and five robotic musicians who played music when struck by levers operated by a camshaft attached to a water wheel. The lengths of day and night could be re-programmed to compensate for their changes throughout the year.[5]

The Renaissance saw a re-invigoration of European mathematics and engineering. Wilhelm Schickard's 1623 device was the first of a number of mechanical calculators constructed by European engineers, but none fit the modern definition of a computer, because they could not be programmed.

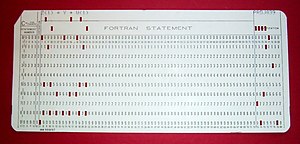

In 1801, Joseph Marie Jacquard made an improvement to the textile loom by introducing a series of punched paper cards as a template which allowed his loom to weave intricate patterns automatically. The resulting Jacquard loom was an important step in the development of computers because the use of punched cards to define woven patterns can be viewed as an early, albeit limited, form of programmability.

It was the fusion of automatic calculation with programmability that produced the first recognizable computers. In 1837, Charles Babbage was the first to conceptualize and design a fully programmable mechanical computer, his Analytical Engine.[8] Limited finances and Babbage's inability to resist tinkering with the design meant that the device was never completed.

In the late 1880s, Herman Hollerith invented the recording of data on a machine-readable medium. Earlier uses of machine-readable media, such as the punched cards of the Jacquard loom described above, had been for control, not data. "After some initial trials with paper tape, he settled on punched cards ..."[9] To process these punched cards he invented the tabulator and the keypunch machine. These three inventions were the foundation of the modern information processing industry. Large-scale automated data processing of punched cards was performed for the 1890 United States Census by Hollerith's company, which later became the core of IBM. By the end of the 19th century, a number of technologies that would later prove useful in the realization of practical computers had begun to appear: the punched card, Boolean algebra, the vacuum tube (thermionic valve) and the teleprinter.

During the first half of the 20th century, many scientific computing needs were met by increasingly sophisticated analog computers, which used a direct mechanical or electrical model of the problem as a basis for computation. However, these were not programmable and generally lacked the versatility and accuracy of modern digital computers.

Alan Turing is widely regarded as the father of modern computer science. In 1936, Turing provided an influential formalisation of the concept of the algorithm and computation with the Turing machine. In naming Turing one of the 100 most influential people of the 20th century, Time Magazine described his role in the modern computer: "The fact remains that everyone who taps at a keyboard, opening a spreadsheet or a word-processing program, is working on an incarnation of a Turing machine."[10]
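To make the idea concrete, the following Python sketch simulates a single-tape Turing machine: a head reads one cell, and a rule table says what to write, which way to move and which state to enter next. The rule table, which increments a binary number, is a hypothetical example chosen for illustration and is not taken from Turing's paper; writing blank cells as "_" is likewise an assumption.

```python
from collections import defaultdict

BLANK = "_"  # symbol assumed to fill every unwritten cell of the tape

# Rule table: (state, symbol read) -> (symbol to write, head move, next state).
# Hypothetical example machine that adds 1 to a binary number on the tape.
INCREMENT_RULES = {
    ("start", "0"): ("0", +1, "start"),       # scan right over the digits
    ("start", "1"): ("1", +1, "start"),
    ("start", BLANK): (BLANK, -1, "carry"),   # past the end: step back onto the last digit
    ("carry", "1"): ("0", -1, "carry"),       # 1 + carry = 0, keep carrying left
    ("carry", "0"): ("1", -1, "halt"),        # 0 + carry = 1, finished
    ("carry", BLANK): ("1", -1, "halt"),      # carried off the left end: new leading 1
}


def run_turing_machine(rules, tape, state="start", halt="halt", max_steps=10_000):
    """Run the machine from the leftmost cell; return the non-blank part of the tape."""
    cells = defaultdict(lambda: BLANK, enumerate(tape))  # conceptually unbounded tape
    head = 0
    steps = 0
    while state != halt:
        if steps == max_steps:
            raise RuntimeError(f"machine did not halt within {max_steps} steps")
        new_symbol, move, state = rules[(state, cells[head])]
        cells[head] = new_symbol
        head += move
        steps += 1
    written = [i for i, symbol in cells.items() if symbol != BLANK]
    if not written:
        return ""
    return "".join(cells[i] for i in range(min(written), max(written) + 1))
```

For example, `run_turing_machine(INCREMENT_RULES, "1011")` returns `"1100"` (11 + 1 = 12). Swapping in a different rule table gives a different machine, while the simulator itself stays the same.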

George Stibitz is internationally recognized as a father of the modern digital computer. While working at Bell Labs in November 1937, Stibitz invented and built a relay-based calculator he dubbed the "Model K" (for "kitchen table", on which he had assembled it), which was the first to use binary circuits to perform an arithmetic operation. Later models added greater sophistication including complex arithmetic and programmability.[11]
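The principle behind binary arithmetic circuits like the Model K can be sketched in Python: a half adder built from XOR and AND, two half adders combined into a full adder, and full adders chained into a ripple-carry adder. This is a generic illustration of binary addition expressed as logic, not a reconstruction of Stibitz's actual relay wiring; the function names are chosen for illustration.

```python
def half_adder(a, b):
    """Add two bits; return (sum_bit, carry_bit)."""
    return a ^ b, a & b


def full_adder(a, b, carry_in):
    """Add two bits plus an incoming carry, using two half adders."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, c1 | c2


def add_binary(x, y):
    """Ripple-carry addition of two binary strings such as '101' and '11'."""
    width = max(len(x), len(y))
    x, y = x.zfill(width), y.zfill(width)
    carry = 0
    result_bits = []
    # Work from the least significant (rightmost) bit to the most significant.
    for a, b in zip(reversed(x), reversed(y)):
        s, carry = full_adder(int(a), int(b), carry)
        result_bits.append(str(s))
    if carry:
        result_bits.append("1")
    return "".join(reversed(result_bits))
```

For instance, `add_binary("101", "11")` gives `"1000"` (5 + 3 = 8). The carry "ripples" from one stage to the next, which is why this simple design gets slower as numbers get wider.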

Programs

In practical terms, a computer program may run from just a few instructions to many millions of instructions, as in a program for a word processor or a web browser. A typical modern computer can execute billions of instructions per second, with processor clock rates measured in gigahertz (GHz), and rarely makes a mistake over many years of operation. Large computer programs consisting of several million instructions may take teams of programmers years to write, and due to the complexity of the task almost certainly contain errors.

Errors in computer programs are called "bugs". Bugs may be benign and not affect the usefulness of the program, or have only subtle effects. But in some cases they may cause the program to "hang" (become unresponsive to input such as mouse clicks or keystrokes) or to fail completely ("crash"). Otherwise benign bugs may sometimes be harnessed for malicious intent by an unscrupulous user writing an "exploit": code designed to take advantage of a bug and disrupt a program's proper execution. Bugs are usually not the fault of the computer. Since computers merely execute the instructions they are given, bugs are nearly always the result of programmer error or an oversight made in the program's design.[16]

In most computers, individual instructions are stored as machine code with each instruction being given a unique number (its operation code or opcode for short). The command to add two numbers together would have one opcode, the command to multiply them would have a different opcode and so on. The simplest computers are able to perform any of a handful of different instructions; the more complex computers have several hundred to choose from—each with a unique numerical code. Since the computer's memory is able to store numbers, it can also store the instruction codes. This leads to the important fact that entire programs (which are just lists of instructions) can be represented as lists of numbers and can themselves be manipulated inside the computer just as if they were numeric data. The fundamental concept of storing programs in the computer's memory alongside the data they operate on is the crux of the von Neumann, or stored program, architecture. In some cases, a computer might store some or all of its program in memory that is kept separate from the data it operates on. This is called the Harvard architecture after the Harvard Mark I computer. Modern von Neumann computers display some traits of the Harvard architecture in their designs, such as in CPU caches.
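The stored-program idea can be sketched with a toy machine in Python. The opcodes (0 = HALT, 1 = LOAD, 2 = ADD, 3 = MULT, 4 = STORE), the single accumulator register and the two-cell instruction format are hypothetical choices for illustration, not any real computer's machine code. Program and data sit in the same list of numbers, so the program can be altered like any other data.

```python
# Hypothetical opcodes for a toy accumulator machine (not a real instruction set).
HALT, LOAD, ADD, MULT, STORE = 0, 1, 2, 3, 4


def run(memory):
    """Execute the program stored in `memory`, starting at address 0.

    Each instruction occupies two cells: an opcode followed by a memory address.
    Instructions and data share one memory, as in a von Neumann machine.
    """
    acc = 0  # accumulator register
    pc = 0   # program counter: address of the next instruction
    while True:
        opcode, address = memory[pc], memory[pc + 1]
        pc += 2
        if opcode == HALT:
            return memory
        elif opcode == LOAD:
            acc = memory[address]
        elif opcode == ADD:
            acc += memory[address]
        elif opcode == MULT:
            acc *= memory[address]
        elif opcode == STORE:
            memory[address] = acc
        else:
            raise ValueError(f"unknown opcode {opcode} at address {pc - 2}")


# Addresses 0-9 hold the program; addresses 10-13 hold the data.
memory = [
    LOAD, 10,    # acc = memory[10]
    ADD, 11,     # acc = acc + memory[11]
    MULT, 12,    # acc = acc * memory[12]
    STORE, 13,   # memory[13] = acc
    HALT, 0,
    2, 3, 4, 0,  # data: the program computes (2 + 3) * 4 into address 13
]

result = run(list(memory))     # result[13] == 20

patched = list(memory)
patched[4] = ADD               # overwrite the MULT opcode as if it were ordinary data
patched_result = run(patched)  # now computes (2 + 3) + 4, so patched_result[13] == 9
```

The first ten cells, `[1, 10, 2, 11, 3, 12, 4, 13, 0, 0]`, are the whole program as plain numbers, which is exactly what makes self-modifying code, loaders and compilers possible on such a machine.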

While it is possible to write computer programs as long lists of numbers (machine language) and this technique was used with many early computers,[17] it is extremely tedious to do so in practice, especially for complicated programs. Instead, each basic instruction can be given a short name that is indicative of its function and easy to remember, a mnemonic such as ADD, SUB, MULT or JUMP. These mnemonics are collectively known as a computer's assembly language. Converting programs written in assembly language into something the computer can actually understand (machine language) is usually done by a computer program called an assembler. Machine languages and the assembly languages that represent them (collectively termed low-level programming languages) tend to be unique to a particular type of computer. For instance, an ARM architecture computer (such as may be found in a PDA or a hand-held video game console) cannot understand the machine language of an Intel Pentium or AMD Athlon 64 computer that might be in a PC.[18]
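A minimal assembler can be sketched in Python: it looks up each mnemonic in a table and emits the corresponding number. The mnemonic table, the opcode values, the "one optional address per instruction" format and the use of ";" for comments are all assumptions made for illustration; real assemblers also handle labels, directives and many addressing modes.

```python
# Hypothetical mnemonic-to-opcode table for a toy machine.
OPCODES = {"HALT": 0, "LOAD": 1, "ADD": 2, "MULT": 3, "STORE": 4}


def assemble(source):
    """Translate assembly text into a flat list of numbers (opcode, address pairs)."""
    machine_code = []
    for line_no, line in enumerate(source.splitlines(), start=1):
        text = line.split(";", 1)[0].strip()  # drop comments and surrounding spaces
        if not text:
            continue
        mnemonic, *operands = text.split()
        opcode = OPCODES.get(mnemonic.upper())
        if opcode is None:
            raise ValueError(f"line {line_no}: unknown mnemonic {mnemonic!r}")
        address = int(operands[0]) if operands else 0  # missing address defaults to 0
        machine_code += [opcode, address]
    return machine_code


program = """
    LOAD 10    ; fetch the first operand
    ADD 11
    MULT 12
    STORE 13   ; save the result
    HALT
"""
```

Here `assemble(program)` yields `[1, 10, 2, 11, 3, 12, 4, 13, 0, 0]`: the same program a person would otherwise have to write out directly as numbers.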

Though considerably easier than in machine language, writing long programs in assembly language is often difficult and error-prone. Therefore, most complicated programs are written in more abstract high-level programming languages that are able to express the needs of the programmer more conveniently (and thereby help reduce programmer error). High-level languages are usually "compiled" into machine language (or sometimes into assembly language and then into machine language) using another computer program called a compiler.[19] Since high-level languages are more abstract than assembly language, it is possible to use different compilers to translate the same high-level language program into the machine language of many different types of computer. This is part of the means by which software like video games may be made available for different computer architectures such as personal computers and various video game consoles.
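To illustrate what a compiler does, here is a toy compiler in Python: it translates a tiny high-level language (integer arithmetic with +, -, * and parentheses) into instructions for a hypothetical stack machine, then runs them. The instruction names (PUSH, ADD, SUB, MULT) and the stack machine itself are assumptions for illustration; parsing is delegated to Python's standard `ast` module, and real compilers also perform type checking, optimization and register allocation.

```python
import ast

# Hypothetical stack-machine mnemonics for each supported operator.
OPERATORS = {ast.Add: "ADD", ast.Sub: "SUB", ast.Mult: "MULT"}


def compile_expr(source):
    """Compile an integer arithmetic expression into a list of stack-machine instructions."""

    def emit(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, int):
            return [("PUSH", node.value)]
        if isinstance(node, ast.BinOp) and type(node.op) in OPERATORS:
            # Post-order traversal: code for both operands, then the operator that consumes them.
            return emit(node.left) + emit(node.right) + [(OPERATORS[type(node.op)],)]
        raise SyntaxError(f"unsupported construct: {ast.dump(node)}")

    return emit(ast.parse(source, mode="eval").body)


def execute(code):
    """Run stack-machine instructions and return the value left on the stack."""
    stack = []
    for instruction in code:
        op = instruction[0]
        if op == "PUSH":
            stack.append(instruction[1])
            continue
        right, left = stack.pop(), stack.pop()
        if op == "ADD":
            stack.append(left + right)
        elif op == "SUB":
            stack.append(left - right)
        elif op == "MULT":
            stack.append(left * right)
        else:
            raise ValueError(f"unknown instruction {op}")
    return stack.pop()
```

`compile_expr("(2 + 3) * 4")` produces `[("PUSH", 2), ("PUSH", 3), ("ADD",), ("PUSH", 4), ("MULT",)]`, and `execute` on that list returns 20. Targeting a different computer would mean changing only what `emit` outputs; the high-level source would stay the same.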

The task of developing large software systems presents a significant intellectual challenge. Producing software with an acceptably high reliability within a predictable schedule and budget has historically been difficult; the academic and professional discipline of software engineering concentrates specifically on this challenge.

Memory

A computer's memory can be viewed as a list of cells into which numbers can be placed or read. Each cell has a numbered "address" and can store a single number. The computer can be instructed to "put the number 123 into the cell numbered 1357" or to "add the number that is in cell 1357 to the number that is in cell 2468 and put the answer into cell 1595". The information stored in memory may represent practically anything. Letters, numbers, even computer instructions can be placed into memory with equal ease. Since the CPU does not differentiate between different types of information, it is the software's responsibility to give significance to what the memory sees as nothing but a series of numbers.
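The cell-and-address model can be mimicked with a Python list, where the index plays the role of the address. The memory size and the value placed in cell 2468 are arbitrary choices for illustration. The last lines show the same stored numbers read two ways, as text and as a single number, which is the sense in which software gives them meaning.

```python
# A toy memory: 4,096 numbered cells, each holding one number (size chosen arbitrarily).
memory = [0] * 4096

memory[1357] = 123                          # "put the number 123 into the cell numbered 1357"
memory[2468] = 877                          # hypothetical value for the second operand
memory[1595] = memory[1357] + memory[2468]  # add the two cells, store the answer in cell 1595

# Memory holds only numbers; their meaning depends on how software interprets them.
memory[0:2] = [72, 105]
as_text = bytes(memory[0:2]).decode("ascii")           # read as character codes: "Hi"
as_number = int.from_bytes(bytes(memory[0:2]), "big")  # read as one 16-bit number: 18537
```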

In almost all modern computers, each memory cell is set up to store binary numbers in groups of eight bits (called a byte). Each byte is able to represent 256 different numbers (2^8 = 256); either from 0 to 255 or -128 to +127. To store larger numbers, several consecutive bytes may be used (typically, two, four or eight). When negative numbers are required, they are usually stored in two's complement notation. Other arrangements are possible, but are usually not seen outside of specialized applications or historical contexts. A computer can store any kind of information in memory if it can be represented numerically. Modern computers have billions or even trillions of bytes of memory.
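Python's integers are not limited to one byte, so the sketch below masks values explicitly to show how an 8-bit two's complement encoding maps -128..127 onto the byte values 0..255, then uses the standard `int.to_bytes` and `int.from_bytes` methods for a multi-byte value. The choice of little-endian byte order is an assumption (it is what x86 processors use); other machines store the bytes in the opposite order.

```python
def to_byte(n):
    """Encode an integer in -128..127 as an 8-bit two's complement value (0..255)."""
    if not -128 <= n <= 127:
        raise ValueError(f"{n} does not fit in one signed byte")
    return n & 0xFF  # equivalent to n + 256 for negative n


def from_byte(b):
    """Decode a byte value (0..255) as a two's complement integer."""
    return b - 256 if b >= 128 else b


# Larger numbers span several consecutive bytes. Little-endian order
# (least significant byte first) is assumed here.
four_bytes = (-2).to_bytes(4, "little", signed=True)          # b'\xfe\xff\xff\xff'
restored = int.from_bytes(four_bytes, "little", signed=True)  # -2
```

So `to_byte(-1)` is 255 and `from_byte(128)` is -128: the same bit pattern means different numbers depending on whether software treats the byte as signed or unsigned.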

The CPU contains a special set of memory cells called registers that can be read and written to much more rapidly than the main memory area. There are typically between two and one hundred registers depending on the type of CPU. Registers are used for the most frequently needed data items to avoid having to access main memory every time data is needed. As data is constantly being worked on, reducing the need to access main memory (which is often slow compared to the ALU and control units) greatly increases the computer's speed.

Computer main memory comes in two principal varieties: random-access memory or RAM and read-only memory or ROM. RAM can be read and written to anytime the CPU commands it, but ROM is pre-loaded with data and software that never changes, so the CPU can only read from it. ROM is typically used to store the computer's initial start-up instructions. In general, the contents of RAM are erased when the power to the computer is turned off, but ROM retains its data indefinitely. In a PC, the ROM contains a specialized program called the BIOS that orchestrates loading the computer's operating system from the hard disk drive into RAM whenever the computer is turned on or reset. In embedded computers, which frequently do not have disk drives, all of the required software may be stored in ROM. Software stored in ROM is often called firmware, because it is notionally more like hardware than software. Flash memory blurs the distinction between ROM and RAM, as it retains its data when turned off but is also rewritable. It is typically much slower than conventional ROM and RAM, however, so its use is restricted to applications where high speed is unnecessary.[24]

In more sophisticated computers there may be one or more RAM cache memories which are slower than registers but faster than main memory. Generally computers with this sort of cache are designed to move frequently needed data into the cache automatically, often without the need for any intervention on the programmer's part.
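The effect of a cache can be sketched as a small, fully associative cache with least-recently-used (LRU) replacement in front of a slower memory. This is a simplification chosen for illustration: real CPU caches work on multi-byte lines, are usually set-associative, handle writes, and are implemented in hardware rather than software. The class name and cache size are hypothetical.

```python
from collections import OrderedDict


class CachedMemory:
    """Read-only main memory fronted by a tiny LRU cache that counts hits and misses."""

    def __init__(self, main_memory, cache_size=4):
        self.main = main_memory
        self.cache = OrderedDict()  # address -> value, ordered from least to most recently used
        self.cache_size = cache_size
        self.hits = 0
        self.misses = 0

    def read(self, address):
        if address in self.cache:
            self.hits += 1
            self.cache.move_to_end(address)  # mark as most recently used
            return self.cache[address]
        self.misses += 1
        value = self.main[address]           # the slow path: go to main memory
        self.cache[address] = value
        if len(self.cache) > self.cache_size:
            self.cache.popitem(last=False)   # evict the least recently used entry
        return value


slow_memory = CachedMemory(list(range(1000)), cache_size=4)
for _ in range(10):
    for address in (0, 1, 2, 3):  # a small loop that keeps touching the same cells
        slow_memory.read(address)
```

After this loop only the first pass has gone to main memory (4 misses), and the remaining 36 reads are served from the cache, with no change to the code doing the reading.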

Networking and the Internet

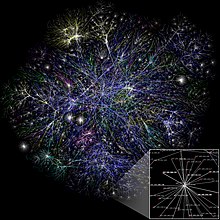

Computers have been used to coordinate information between multiple locations since the 1950s. The U.S. military's SAGE system was the first large-scale example of such a system, which led to a number of special-purpose commercial systems like Sabre.[30]

In the late 1960s and 1970s, computer engineers at research institutions throughout the United States began to link their computers together using telecommunications technology. This effort was funded by ARPA (now DARPA), and the computer network that it produced was called the ARPANET.[31] The technologies that made the ARPANET possible spread and evolved.

In time, the network spread beyond academic and military institutions and became known as the Internet. The emergence of networking involved a redefinition of the nature and boundaries of the computer. Computer operating systems and applications were modified to include the ability to define and access the resources of other computers on the network, such as peripheral devices, stored information, and the like, as extensions of the resources of an individual computer. Initially these facilities were available primarily to people working in high-tech environments, but in the 1990s the spread of applications like e-mail and the World Wide Web, combined with the development of cheap, fast networking technologies like Ethernet and ADSL, saw computer networking become almost ubiquitous. In fact, the number of networked computers is growing rapidly. A very large proportion of personal computers regularly connect to the Internet to communicate and receive information. "Wireless" networking, often using mobile phone networks, has made networking increasingly common even in mobile computing environments.

600s BC?

The abacus is developed in China. It was later adopted by the Japanese and the Russians.

600s AD?

Arabic numerals, including the zero (represented by a dot), were invented in India. Arabic translations of Indian mathematical texts brought these numerals to the attention of Europeans. Arabic numerals entered Europe through Spain around 1000 AD and first became popular among Italian merchants around 1300. Until then, people used the Roman system in western Europe and the Greek system in the east. The original numerals were similar to the modern Devanagari numerals used in northern India.

c. 1440

The movable-type printing press is invented by Johannes Gutenberg.

1492

Francis Pellos of Nice invents the decimal point.

c. 1600

Thomas Harriot introduces several of the symbols used in algebra. He also draws the first telescopic maps of the Moon and is among the first to observe sunspots.

1600

Dr. William Gilbert studies static electricity and coins the term "electric" (Latin electricus) in De Magnete.

1614

John Napier invents logarithms.

1622

William Oughtred invents the slide rule.

1623

Wilhelm Schickard makes his "Calculating Clock."

1644-5

Blaise Pascal, a young French mathematician, develops the Pascaline, a simple mechanical device for the addition of numbers. It consists of several toothed wheels arranged side by side, each marked from 0 to 9 at equal intervals around its perimeter. The important innovation is an automatic 'tens-carrying' operation: when a wheel completes a revolution, it is turned past the 9 to 0 and automatically pulls the adjacent wheel on its left forward one tenth of a revolution, thus adding, or 'carrying'. (Pascal is also a respected philosopher and is credited with launching one of the first public bus services.)
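The tens-carrying mechanism can be modelled in a few lines of Python. This is a hypothetical software model, not a replica of the gearing: each wheel is a digit from 0 to 9, dialling in a number advances the wheels one step at a time, and a wheel passing from 9 to 0 advances its left-hand neighbour by one. The six-wheel default and the discarded overflow from the leftmost wheel are assumptions of the model.

```python
class Pascaline:
    """Toy model of an adding machine with decimal wheels and automatic tens-carrying.

    A carry out of the leftmost wheel is discarded (overflow).
    """

    def __init__(self, num_wheels=6):
        self.wheels = [0] * num_wheels  # wheels[0] is the rightmost (units) wheel

    def turn(self, position, steps):
        """Advance the wheel at `position` by `steps` tenths of a revolution."""
        for _ in range(steps):
            i = position
            while i < len(self.wheels):
                self.wheels[i] += 1
                if self.wheels[i] < 10:
                    break
                # The wheel passes 9 to 0 and pulls its left-hand neighbour forward one step.
                self.wheels[i] = 0
                i += 1

    def add(self, number):
        """Dial in a non-negative integer digit by digit, as an operator would."""
        for position, digit in enumerate(reversed(str(number))):
            self.turn(position, int(digit))

    def value(self):
        return int("".join(str(d) for d in reversed(self.wheels)))
```

Adding 999 and then 1 sets off a chain of three carries, leaving the wheels reading 1000.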

1660

Otto von Guericke builds the first "electric machine."

1674

Gottfried Wilhelm von Leibniz designs his "Stepped Reckoner", a machine similar to Pascal's, with the added features of multiplication and division, which is constructed by a man named Olivier, of Paris. (Leibniz is also a respected philosopher and the co-inventor of calculus.)

1752

Ben Franklin captures lightning.

1786

J. H. Mueller, of the Hessian army, conceives the idea of what came to be called a "difference engine". That's a special-purpose calculator for tabulating values of a polynomial. Mueller's attempt to raise funds fails and the project is forgotten.
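The idea behind a difference engine is that, for a polynomial of degree n, the n-th differences between successive values are constant, so after an initial setup each new table entry needs only additions. The Python sketch below illustrates this method of finite differences; the example polynomial x² + x + 41 and the helper names are chosen for illustration.

```python
def initial_differences(poly, start, degree):
    """Set-up step: compute f(start) and its forward differences up to `degree`."""
    values = [poly(start + k) for k in range(degree + 1)]
    columns = []
    while values:
        columns.append(values[0])
        values = [b - a for a, b in zip(values, values[1:])]
    return columns


def tabulate(columns, count):
    """Produce `count` successive polynomial values using additions only."""
    columns = list(columns)
    table = []
    for _ in range(count):
        table.append(columns[0])
        # Each column absorbs the (not yet updated) value of the column to its right.
        for i in range(len(columns) - 1):
            columns[i] += columns[i + 1]
    return table


def f(x):
    return x * x + x + 41
```

Here `initial_differences(f, 0, 2)` gives the starting columns `[41, 2, 2]`, and `tabulate` on them produces 41, 43, 47, 53, 61, ... without ever multiplying, which is what made the scheme attractive for a mechanical calculator.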

1790

Galvani discovers electric current, and uses it on frogs' legs.

1800

Alessandro Volta invents the battery.

1801

Joseph-Marie Jacquard develops the punch card system which programs and thereby automates the weaving of patterns on looms.

1809

Sir Humphry Davy invents the electric arc lamp.

1820

Charles Xavier Thomas de Colmar of France makes his "Arithmometer", the first mass-produced calculator. It does multiplication using the same general approach as Leibniz's calculator; with assistance from the user it can also do division. It is also the most reliable calculator yet. Machines of this general design, large enough to occupy most of a desktop, continue to be sold for about 90 years.

1822-23

Charles Babbage begins his government-funded project to build the first of his machines, the "Difference Engine", to calculate and tabulate polynomial functions by the method of finite differences.

The importance of his work is recognized by Ada Lovelace, Lord Byron's daughter, who, gifted in mathematics, later writes detailed notes on his Analytical Engine (published in 1843), including a method for computing Bernoulli numbers.

1825

The first railway is opened for public use.

1826

Photography is invented by Nicéphore Niépce.

1830

Thomas Davenport of Vermont invents an electric motor, which he calls a toy.

1831

Michael Faraday produces electricity with the first generator.

1832-34

Babbage conceives, and begins to design, his "Analytical Engine". It can be considered a programmable calculator, very close to the basic idea of a computer. The machine was designed to perform an addition in 3 seconds and a multiplication or division in 2 to 4 minutes.

1837

Samuel F. B. Morse develops the electric telegraph.

1868

Christopher Latham Sholes (Milwaukee) invents the first commercial typewriter.

1872

One of the first large-scale analog computers is developed by Lord Kelvin to predict the height of tides in English harbors.

1876

Telephone is invented by Alexander Graham Bell.

1877

The phonograph is invented by Thomas Edison.

1881

Charles S. Tainter invents the dictaphone.

1886

Dorr E. Felt of Chicago makes his "Comptometer". This is the first key-driven calculator.

1887

E. J. Marey invents the Motion Picture Camera.

Eastman patents the first box camera, moving photography from the hands of professionals to the general public.

1890

Herman Hollerith of MIT designs a punch card tabulating machine, which is used effectively in the US census of this year. The cards are read electrically.

1891

Thomas Edison develops the Motion Picture Projector.

1896

Guglielmo Marconi develops the Radio Telegraph.

1899

Valdemar Poulsen develops the Magnetic Recorder.

1900

Rene Graphen develops the Photocopying Machine.

1901

Reginald A. Fessenden develops the Radio Telephone.

1906

Henry Babbage, Charles's son, with the help of the firm of R. W. Munro, completes part of his father's Analytical Engine (the "mill"), just to show that it would have worked.

1913

Thomas Edison invents Talking Motion Pictures.

1919

W. H. Eccles and F. W. Jordan publish the first flip-flop circuit design.

1924

Computing-Tabulating-Recording becomes International Business Machines.

1925

J. P. Maxfield develops the All-electric Phonograph.

1927

Philo T. Farnsworth, one of the inventors of television, gives the first demonstration of his electronic television system. See The Last Lone Inventor by Evan Schwartz (http://www.lastloneinventor.com).

1933

IBM introduces the first commercial electric typewriter.

Edwin H. Armstrong develops FM Radio.

1936

Robert A. Watson-Watt develops Radar.

Benjamin Burack builds the first electric logic machine.

In his thesis, Claude Shannon demonstrates the relationship between electrical circuitry and symbolic logic.

1937

Alan M. Turing, of Cambridge University, England, publishes a paper on "computable numbers" which introduces the theoretical simplified computer known today as a Turing machine.

1938

Claude E. Shannon publishes a paper on the implementation of symbolic logic using relays.

1939

John V. Atanasoff and graduate student Clifford Berry, of Iowa State College complete a prototype 16-bit adder. This is the first machine to calculate using vacuum tubes.

1940s

First electronic computers in US, UK, and Germany

1941

Working with limited backing from the German Aeronautical Research Institute, Konrad Zuse completes the "V3" (later renamed Z3), the first operational programmable calculator. Zuse is a friend of Wernher von Braun.

1943

Howard H. Aiken and his team at Harvard University, Cambridge, Mass., funded by IBM, complete the "ASCC Mark I" ("Automatic Sequence Controlled Calculator Mark I"). The machine is 51 feet long, 8 feet high, weighs 5 tons, and incorporates 750,000 parts. It is one of the first large-scale automatic computers built in the U.S.; it is electromechanical and calculates in decimal rather than binary.

Max Newman, Wynn-Williams, and their team at the secret English Government Code and Cypher School, complete the "Heath Robinson". This is a specialized machine for cipher-breaking. (Heath Robinson was a British cartoonist known for his Rube-Goldberg-style contraptions.)

1945

John von Neumann drafts a report describing a stored-program computer, and gives rise to the term "von Neumann computer".

John W. Mauchly and J. Presper Eckert and their team at the University of Pennsylvania complete a secret project for the US Army's Ballistic Research Laboratory: the ENIAC (Electronic Numerical Integrator and Computer). It weighs 30 tons, is 18 feet high and 80 feet long, covers about 1,000 square feet of floor, and consumes 130 to 140 kilowatts of electricity. Containing 17,468 vacuum tubes and over 500,000 soldered connections, it costs $487,000. It can perform five thousand additions in one second, yet its circuitry could now be contained on a panel the size of a playing card. Today's desktop computer stores millions of times more information and is 50,000 times faster. The ENIAC's clock speed is 100 kHz.

1946

Zuse invents Plankalkül, the first high-level programming language, while hiding out in Bavaria.

The ENIAC is revealed to the public. A panel of lights is added to help show reporters how fast the machine is and what it is doing; apparently, Hollywood takes note.

1947

The magnetic drum memory is independently invented by several people, and the first examples are constructed.

Two days before Christmas (December 23), the transistor is first demonstrated at Bell Labs.

1948

Max Newman, Freddie C. Williams, Tom Kilburn, and their colleagues at Manchester University complete a prototype machine, the "Manchester Baby", forerunner of the Manchester Mark 1. It is often considered the first machine that everyone would call a computer, because it is the first to run a program stored in its own electronic memory.

First tape recorder is sold

1949

A quote from Popular Mechanics:

“Where a computer like the ENIAC is equipped with 18,000 vacuum tubes and weighs 30 tons, computers in the future may have only 1,000 vacuum tubes and weigh only 1 1/2 tons.”

Jay W. Forrester and his team at MIT construct the "Whirlwind" for the US Navy's Office of Research and Inventions. The Whirlwind is the first computer designed for real-time work; it can do 500,000 additions or 50,000 multiplications per second. This allows the machine to be used for air traffic control.

Forrester conceives the idea of magnetic core memory as it is to become commonly used, with a grid of wires used to address the cores.

1950

Alan Turing publishes "Computing Machinery and Intelligence."

1951

The U.S. Census Bureau takes delivery of the first UNIVAC I, originally developed by Eckert and Mauchly.

An Wang establishes Wang Laboratories

Ferranti Ltd. completes the first commercial computer. It has 256 40-bit words of main memory and 16K words of drum. An eventual total of 8 of these machines are sold.

Grace Murray Hopper, of Remington Rand, invents the modern concept of the compiler.

1952

The EDVAC is finally completed. It has 4000 tubes, 10,000 crystal diodes, and 1024 44-bit words of ultrasonic memory. Its clock speed is 1 MHz.

1953

Minsky and McCarthy get summer jobs at Bell Labs

1955

An Wang is issued Patent Number 2,708,722, including 34 claims for the magnetic memory core.

Shockley Semiconductor is founded in Palo Alto.

1956

John Bardeen, Walter Brattain, and William Shockley share the Nobel Prize in Physics for the transistor.

The Rockefeller Foundation funds Minsky and McCarthy's AI conference at Dartmouth.

CIA funds GAT machine-translation project.

Newell, Shaw, and Simon develop Logic Theorist.

1957

USSR launches Sputnik, the first earth satellite.

Newell, Shaw, and Simon develop General Problem Solver.

Fortran, the first popular programming language, hits the streets.

1958

McCarthy creates first LISP.

1959

Minsky and McCarthy establish MIT AI Lab.

Frank Rosenblatt introduces Perceptrons.

COBOL, a programming language for business use, and LISP, the first list-processing language, come out.

1960s

Edsger Dijkstra suggests that software and data should be created in standard, structured forms, so that people could build on each other's work.

Algol 60, a European programming language and ancestor of many others, including Pascal, is released.

1962

First industrial robots.

1963-64

Douglas Engelbart invents the computer mouse, first called the X-Y Position Indicator.

1964

Bobrow's "Student" solves math word-problems.

John Kemeny and Thomas Kurtz of Dartmouth College develop the BASIC programming language. PL/I comes out the same year.

Wang introduces the LOCI (logarithmic calculating instrument), a desktop calculator at the bargain price of $6700, much less than the cost of a mainframe. In six months, Wang sells about twenty units.

The Sabre reservation system is brought online. It solves American Airlines' problem of coordinating information about hundreds of flight reservations across the continent every day.

Philips makes public the compact cassette.

1966

Weizenbaum and Colby create ELIZA.

Hewlett-Packard enters the computer market with the HP2116A real-time computer. It is designed to crunch data acquired from electronic test and measurement instruments. It has 8K of memory and costs $30,000.

Hewlett-Packard announces their HP 9100 series calculator with CRT displays selling for about $5000 each.

Intel is founded and begins marketing a semiconductor chip that holds 2,000 bits of memory. Wang is the first to buy this chip, using it in their business oriented calculators called the 600 series.

Late 1960s

IBM sells over 30,000 mainframe computers based on the 360 family which uses core memory.

1967

Greenblatt's MacHack defeats Hubert Dreyfus at chess.

IBM builds the first floppy disk

1969

Kubrick's "2001" introduces AI to mass audience.

Intel announces a 1 Kb (1,024-bit) RAM chip, which has a significantly larger capacity than any previously produced memory chip.

The Unix operating system, characterised by multitasking, time-sharing, virtual memory, a multi-user design and security, is designed by Ken Thompson and Dennis Ritchie at AT&T Bell Laboratories, USA.

ARPANET (the future Internet) links its first two computers, at UCLA and the Stanford Research Institute. Dr. Leonard Kleinrock, a UCLA-based pioneer of Internet technology, and his assistant Charley Kline, after solving an initial problem with an inadequate memory buffer, successfully send a "login" command to a Stanford machine set up and tuned by Bill Duvall. It is the first message sent over the ARPANET.

(UCLA, UCSB, University of Utah and SRI are the four original members of Arpanet.)

1970s

Commodore, a Canadian electronics company, moves from Toronto to Silicon Valley and begins selling calculators assembled around a Texas Instruments chip.

1970

Douglas Engelbart patents his X-Y Position Indicator mouse.

Niklaus Wirth releases Pascal.

1971

The price of the Wang Model 300 series calculator drops to $600. Wang introduces the 1200 Word Processing System.

Stephen Wozniak and Bill Fernandez build their "Cream Soda computer."

Bowmar Instruments Corporation introduces the LSI-based (large scale integration) four function (+, -, *, /) pocket calculator with LED at an initial price of $250.

Intel markets the first microprocessor. Its speed is 60,000 'additions' per second.

1972

Ray Tomlinson, author of first email software, chooses @ sign for email addresses.

Dennis Ritchie invents C.

Bill Gates and Paul Allen form Traf-O-Data (which eventually becomes Microsoft).

Stephen Wozniak and Steven Jobs begin selling blue boxes.

Electronic mail!

1973

Stephen Wozniak joins Hewlett-Packard.

Radio-Electronics publishes an article by Don Lancaster describing a "TV Typewriter."

IBM develops the first true sealed hard disk drive. The drive was called the "Winchester" after the rifle of the same name. It used two 30 MB platters.

1975

MITS introduces the Altair 8800, often called the first personal computer, in the form of a kit to be assembled by the buyer. It is based on Intel's 8-bit 8080 processor and includes 256 bytes of memory (expandable to 12 KB), a set of toggle switches and an LED panel. A keyboard, screen or storage device can be added using expansion cards.

The Apple I....

1976

Greenblatt creates first LISP machine.

Queen Elizabeth is first head of state to send email.

Shugart introduces 5.25" floppy.

IBM introduces a total information processing system. The system includes diskette storage, magnetic card reader/recorder, and CRT. The print station contains an ink jet printer, automatic paper and envelope feeder, and optional electronic communication.

Apple Computer opens its first offices in Cupertino and introduces the Apple II. It is the first personal computer with color graphics. It has a 6502 CPU, 4KB RAM, 16KB ROM, keyboard, 8-slot motherboard, game paddles, and built-in BASIC.

Commodore introduces the PET computer.

Tandy/Radio Shack announces its first TRS-80 microcomputer.

Ink-jet printing announced by IBM.

JVC introduces the VHS format for videocassette recorders.

1977

The first digital audio disc prototypes are shown by Mitsubishi, Sony, and Hitachi at the Tokyo Audio Fair.

1978

Apple introduces and begins shipping disk drives for the Apple II and initiates the LISA research and development project.

BITNET (Because It's Time Network), a network for electronic mail, listserv servers, and file transfer, is established as a cooperative enterprise by the City University of New York and Yale University.

Xerox releases the 8010 Star and 820 computers.

IBM announces its Personal Computer.

DEC announces a line of personal computers.

HP introduces the HP 9000 technical computer with 32-bit "superchip" technology; it is the first "desktop mainframe", as powerful as the room-sized computers of the 1960s.

1979

Kevin MacKenzie invents the emoticon :-)

Usenet news groups.

1980

First AAAI conference at Stanford.

Telnet. Remote log-in and long-distance work (telecommuting) are now possible.

1981

Listserv mailing list software. Online knowledge-groups and virtual seminars are formed.

Osborne introduces the Osborne 1, the first portable computer.

MS-DOS introduced.

1982

The CD (12 cm, 74 minutes of playing time) and CD player are released by Sony and Philips in Europe and Japan. A year later, CD technology is introduced to the USA.

1983

IBM announces the PCjr.

Apple Computer Inc. of Cupertino, California, announces the Lisa, the first business computer with a graphical user interface. The computer has a 5 MHz 68000 CPU, an 860 KB 5.25" floppy drive, a 12" black-and-white screen, a detached keyboard, and a mouse.

1984

Apple Computer Inc. launches the Macintosh personal computer. The original model has 128 KB of memory and a 3.5" 400 KB floppy disk drive. Its operating system, with a striking graphical interface, is bundled with MacWrite (word processor) and MacPaint (freehand black-and-white drawing) software.

Apple introduces the 3.5" floppy.

The domain name system is established.

1985

CD-ROM technology (disk and drive) for computers is developed by Sony and Philips.

File Transfer Protocol.

1987

Microsoft ships Windows 1.01.

1988

The 386 chip brings PC speeds into competition with LISP machines.

1989

Tim Berners-Lee invents the WWW while working at CERN, the European Particle Physics Laboratory in Geneva, Switzerland. He won the Finnish Technology Award Foundation's first Millennium Technology Prize in April of 2004. The $1.2 million prize was presented by Tarja Halonen, president of Finland.

1990

Archie FTP semi-crawler search engine, built by Peter Deutsch of McGill University.

1991

CD-recordable (CD-R) technology is released.

WAIS publisher-fed search engine, invented by Brewster Kahle of the Thinking Machines Co.

Gopher, created at the University of Minnesota Microcomputer, Workstations & Networks Center.

The WWW server combines URL (addressing) syntax, the HTML markup language for documents, and the HTTP communications protocol. It also integrates earlier Internet tools into a seamless whole.

Senator Al Gore introduces the High Performance Computing and Communication Act (known as the Gore Bill) to promote the development and use of the Internet. Among other things, it later funded the development of Mosaic in 1993. After a 1999 interview, his political opponents misquoted him as claiming to have invented the Internet.

1992

There are about 20 Web servers in existence (Ciolek 1998).

1993

"Universal Multiple-Octet Coded Character Set" (UCS), also known as ISO/IEC 10646, is published by the International Organization for Standardization (ISO). It is the first officially standardized coded character set intended eventually to include all characters used in all the written languages of the world (and, in addition, all mathematical and other symbols).

The Mosaic graphical WWW browser is developed by Marc Andreessen (Cailliau 1995). Its graphical user interface finally makes the WWW a competitor to Gopher. Producing web pages becomes easy, even for an amateur.

There are 200+ Web servers in existence (Ciolek 1998).

1994

The Labyrinth graphical 3-D (VRML) WWW browser is built by Mark Pesce. It provides access to a virtual reality of three-dimensional objects (artifacts, buildings, landscapes).

Netscape WWW browser, developed by Marc Andreessen, Mountain View, California.

1995

RealAudio narrowcasting (Reid 1997:69).

Java programming language, developed by Sun Microsystems, Palo Alto, California. Client-side, on-the-fly supplementary data processing can be performed using safe, downloadable micro-programs (applets).

Metacrawler WWW meta-search engine. The content of WWW is actively and automatically catalogued.

The first online bookstore, Amazon.com, is launched in Seattle by Jeffrey P. Bezos.

AltaVista WWW crawler search engine is built by Digital around the Digital Alpha processor. A very fast search of 30-50% of the WWW is made possible.

1996

Google begins as a research project by Larry Page and Sergey Brin while they are students at Stanford University.

There are 100,000 Web servers in existence.

1997

There are 650,000 Web servers in existence.

“Deep Blue 2” beats Kasparov, the best chess player in the world. The world as we know it ends.

DVD technology (players and movies) is released. A DVD-recordable standard is created (Alpeda 1998).

Web TV introduced.

1998

Kevin Warwick, Professor of Cybernetics at the University of Reading in the U.K., becomes the first human to host a microchip implant. The glass capsule, approximately 23 mm by 3 mm and containing several microprocessors, stays in Warwick's left arm for nine days. It is used to test the implant's interaction with computer-controlled doors and lights in a futuristic 'intelligent office building'.

There are 3.6 million Web servers in existence (Zakon 1998).

1999

There are 4.3 million Web servers in existence (Zakon 1999).

Netomat: The Non-Linear Browser, by New York artist Maciej Wisniewski, is launched. The open-source software uses Java and XML technology to navigate the web in terms of the data (text, images, and sounds) it contains, as opposed to traditional browsers (Mosaic, Lynx, Netscape, Explorer), which navigate the web's pages.

1999/2000

A global TV programme, '2000Today', reports live for 25 hours non-stop on the New Year celebrations in 68 countries all over the world. It is the first ever show of that duration and geographical coverage. The programme involved round-the-clock work by over 6,000 technical personnel and used an array of 60 communication satellites to reach 1 billion viewers in all time zones around the globe (The Canberra Times, 1 Jan, 2000).

Jimmy Wales and Larry Sanger start Wikipedia as an offshoot of Nupedia.

THE BASIC PARTS OF A COMPUTER

Thank you to my references:

www.yahoo.com

Wikipedia, the free encyclopedia
